We all have heard the saying “Bad Apple Ruins The Bunch”.First Bad Version is a problem that beautifully illustrates the same. Today we have a problem which is First Bad Version. One of the interns has made an nth bad commit due to which commits from n+1 have all been facing merge conflicts. The task in hand is pretty simple. All we have to do is “search” for the first bad commit that leads to the downfall of our software. However, we can only check if ith commits isBadVersion() and we have very limited calls.

Taking it from the top. Let us look at a test case

Input

n=6 and version=3 is the faulty commit

isBadVersion(1)=false

isBadVersion(2)=false

isBadVersion(3)=true

Helping us detect the first bad version

Output

3

Table of Contents

Approach-1

Linear Search

The first and easy approach that might come to our mind would be using a linear search.

We look through each of the elements starting from 0 one by one

Let us look at that by the code.

Implementation

Java Code For First Bad Version

public class Solution extends VersionControl

{

public int firstBadVersion(int n)

{

int ans=0;

for(int i=0;i<n;i++)

{

if(isBadVersion(i))

{ans=i;break;}

}

return ans;

}

}C++ Code For First Bad Version

// The API isBadVersion is defined for you.

// bool isBadVersion(int version);

class Solution

{

public:

int firstBadVersion(int n)

{

int ans=0;

for(int i=0;i<n;i++)

{

if(isBadVersion(i))

{ans=i;break;}

}

return ans;

}

};Complexity Analysis

The time complexity of the above approach=O(n)

Why?

- Example-We had 5 versions

- We go through each and every version until we encounter the third version and term it as bad

- In the worst case, we end up going to the last element in the search of the bad one

- Thus, leading the time complexity to O(n) i.e linear

This, however, leads to a timeout.

Approach-2

Binary Search

The linear search may give off a timeout for a few of the test cases which can efficiently be countered with the help of a binary search.

We look out for the mid element each time cutting the time involved considerably. Assuming we have 256 versions and 255th is the bad one.

The first mid=(0+256)/2=128. We check the 128th version.

- If it is bad then we go and check the previous half.

- We calculate 0+(128-0)/2=64 and repeat the process

- The version if is good we check the later half.

- We calculate 128+(256-128)/2=192 and repeat the process

We calculate the mid and check if the version is good or bad.

Let us look into the code.

Implementation

Java Code

/* The isBadVersion API is defined in the parent class VersionControl.

boolean isBadVersion(int version); */

public class Solution extends VersionControl

{

public int firstBadVersion(int n)

{

int st=1;

int en=n;

int ans=n;

while(st<=en)

{

int mid=st+(en-st)/2;

if(isBadVersion(mid))

{ans=mid;en=mid-1;}

else

st=mid+1;

}

return ans;

}

}C++ Code

class Solution

{

public:

int firstBadVersion(int n)

{

int st=1;

int en=n;

int ans=n;

while(st<=en)

{

int mid=st+(en-st)/2;

if(isBadVersion(mid))

{ans=mid;en=mid-1;}

else

st=mid+1;

}

return ans;

}

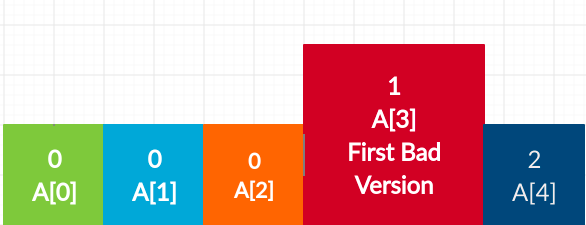

};n=5 First Bad Version at n=3

3

Complexity Analysis

Time complexity=O(log n)

- In each search depending on the search, we search in the respective half

- We are halving our search space each and every time we proceed on to the next search

- Let us assume we make k calls

- Till when do we make these calls?

- Till we have only one search element we are looking at

- Thus, 2^k=n

- Taking log both sides k=log(n)

- Thus, making the time complexity log(n)

We might consider a ternary search as well.

But in many cases, it has been proven that binary search works better than the ternary search. Thus, we should consider the above-suggested search mechanism.

Now that we have gone through the problem. Wanna check it out for more complex data structures?

Check the link below-