Table of Contents

Problem Statement

Web Crawler LeetCode Solution – Given a URL startUrl and an interface HtmlParser, implement a web crawler to crawl all links that are under the same hostname as startUrl.

Return all URLs obtained by your web crawler in any order.

Your crawler should:

- Start from the page:

startUrl - Call

HtmlParser.getUrls(url)to get all URLs from a webpage of the given URL. - Do not crawl the same link twice.

- Explore only the links that are under the same hostname as

startUrl

As shown in the example URL above, the hostname is example.org. For simplicity’s sake, you may assume all URLs use HTTP protocol without any port specified. For example, the URLs http://leetcode.com/problems and http://leetcode.com/contest are under the same hostname, while URLs http://example.org/test and http://example.com/abc are not under the same hostname.

The HtmlParser interface is defined as such:

interface HtmlParser {

// Return alist of all urls from a webpage of given

url.

public List<String> getUrls(String url);

}Below are two examples explaining the functionality of the problem, for custom testing purposes you’ll have three variables urls, edges and startUrl. Notice that you will only have access to startUrl in your code, while urls and edges are not directly accessible to you in code.

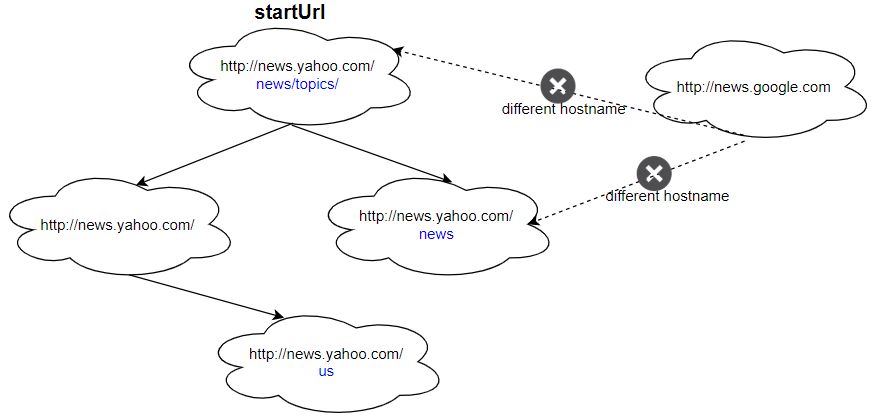

Input: urls = [ "http://news.yahoo.com", "http://news.yahoo.com/news", "http://news.yahoo.com/news/topics/", "http://news.google.com", "http://news.yahoo.com/us" ] edges = [[2,0],[2,1],[3,2],[3,1],[0,4]] startUrl = "http://news.yahoo.com/news/topics/" Output: [ "http://news.yahoo.com", "http://news.yahoo.com/news", "http://news.yahoo.com/news/topics/", "http://news.yahoo.com/us" ]

Explanation

Each URL can be considered as a node of a directed graph and whenever we call getUrls function of htmlParser we get the edges associated with the current node (i.e. URL). So a simple BFS/DFS traversal that keeps on checking whether a particular edge is valid (is the hostname the same for both) or not will work and if it’s a valid edge then we can add it to the result.

Code

Java Code for Web Crawler LeetCode Solution

class Solution {

public List<String> crawl(String startUrl, HtmlParser htmlParser) {

Set<String> set = new HashSet<>();

Queue<String> queue = new LinkedList<>();

String hostname = getHostname(startUrl);

queue.offer(startUrl);

set.add(startUrl);

while (!queue.isEmpty()) {

String currentUrl = queue.poll();

for (String url : htmlParser.getUrls(currentUrl)) {

if (url.contains(hostname) && !set.contains(url)) {

queue.offer(url);

set.add(url);

}

}

}

return new ArrayList<String>(set);

}

private String getHostname(String Url) {

String[] ss = Url.split("/");

return ss[2];

}

}C++ Code for Web Crawler LeetCode Solution

class Solution {

public:

vector<string> crawl(string startUrl, HtmlParser htmlParser) {

const string hostname = startUrl.substr(0, startUrl.find('/', 7));

vector<string> q {startUrl};

unordered_set<string> seen(q.cbegin(), q.cend());

for (int i = 0; i < q.size(); ++i) {

string url = q[i];

for (auto& child : htmlParser.getUrls(url)) {

if (child.find(hostname) == string::npos || seen.count(child)) continue;

q.push_back(child);

seen.insert(child);

}

}

return q;

}

};Python Code for Web Crawler LeetCode Solution

class Solution:

def crawl(self, startUrl: str, htmlParser: 'HtmlParser') -> List[str]:

visited = {startUrl}

domain = startUrl.split("http://")[1].split("/")[0]

ans = [startUrl]

queue = collections.deque([startUrl])

while queue:

for _ in range(len(queue)):

url = queue.popleft()

check = htmlParser.getUrls(url)

for new_url in check:

if new_url in visited:

continue

if new_url.split("http://")[1].split("/")[0] != domain:

continue

ans.append(new_url)

visited.add(new_url)

queue.append(new_url)

return ansComplexity Analysis

Time Complexity

O(N) since we would visit each node once.

Space Complexity

O(N) since we would have a queue to keep track of adjacent nodes.

Reference: https://en.wikipedia.org/wiki/Web_crawler